The pros and cons of systematic global processing


As the resolution of our satellite missions improves, the data volume of the output products increases, and computing and storage take a growing share of the costs.

Let’s assume we are preparing a new satellite mission, for instance a Sentinel-like mission, with the hope of potential use by operational or private users, as well as scientists, of course. These applications could be for instance: estimating yields, biomass, evapotranspiration, detecting crop diseases, deforestation or monitoring snow melt… These applications could be performed at continental, country or region scales.

This mission will acquire data globally and produce at least one terabyte (TB) of L1C products each day, which are then transformed into L2A, L2B, L3A… Let’s assume that, over the satellite lifetime, the mission totals a dozen petabytes (PB) and requires 1000 cores to produce the data in near real time.

In the early stages of a mission, when the ground segment is being defined, the following question usually arises: which of the following options should we select?

- a global production, in near real time, with data stored indefinitely and reprocessed when a new, better version is available,

- or an on-demand production, where products are only generated when someone asks for them?

In my opinion, the production should be global and systematic. Here’s why.

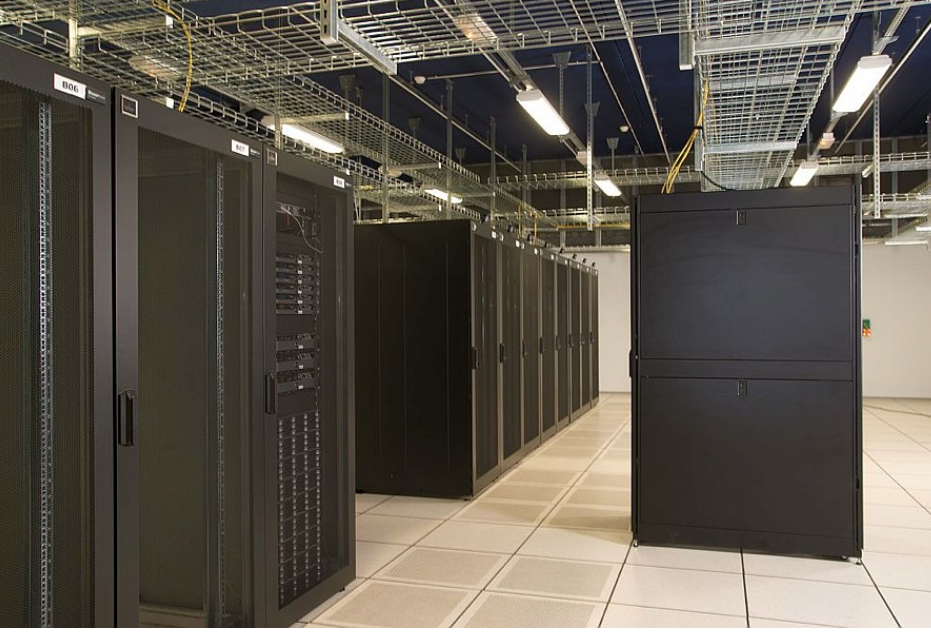

Processing costs: hardware

Cost of global processing

I am not a specialist, but I have colleagues who are, and who estimated the cost of a computing center of the size needed to process the data of a Sentinel-like mission with only one satellite. These costs include maintenance, power…

| | Storage | Processing |
|---|---|---|
| Per year | 100 k€/PB/year | 100 k€/1000 cores/year |
| Total over 7 years (12 PB, 3000 cores) | 4.2 M€ | 2.1 M€ |

The archive reaches 12 PB at the end of the 7-year life, but it is almost empty at the beginning. Storing all the data therefore requires an average of 6 PB over 7 years, or 4.2 M€. After the satellite’s end of life, the data are still useful, so storage should go on, at the full cost of 12 PB. However, the data could then be stored on tapes, with slower access but a much lower cost, and we can still hope that storage costs and carbon footprint will continue to decrease with time.

For a global production of medium-resolution data with frequent revisit, at least 1000 cores of processing capacity are needed. Of course, this depends on the mission and the methods used. It is also necessary to allow reprocessing (because who gets the processing perfect the first time?), and a reprocessing needs to run at least 3 times faster than the real-time processing. Even at that speed, the final reprocessing of 7 years of data at the end of life takes more than two years! As a result, at least 3000 cores are necessary, for a total of 2.1 M€ over seven years.

With 12 petabytes and something like 3000 cores, we should have a total hardware cost (including maintenance, energy…) of around 6 to 7 M€. This is less than 5% of the cost of a one-satellite Sentinel-like mission, but still a lot.
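The arithmetic behind these figures can be laid out as a short Python sketch. It uses only the unit costs from the table and assumes, as the text does, that the archive grows linearly from zero to its final size. The function names are illustrative and do not come from any real tool.

```python
# Unit costs quoted in the table (maintenance and power included).
STORAGE_KEUR_PER_PB_YEAR = 100.0
COMPUTE_KEUR_PER_1000_CORES_YEAR = 100.0


def storage_cost_keur(final_pb, years):
    """Cost of an archive growing linearly from 0 to final_pb (assumption)."""
    average_pb = final_pb / 2
    return average_pb * STORAGE_KEUR_PER_PB_YEAR * years


def compute_cost_keur(cores, years):
    """Cost of running a fixed number of cores for the whole period."""
    return cores / 1000 * COMPUTE_KEUR_PER_1000_CORES_YEAR * years


def reprocessing_years(archive_years, speedup):
    """Time to reprocess an archive at `speedup` times real time."""
    return archive_years / speedup


storage = storage_cost_keur(final_pb=12, years=7)
compute = compute_cost_keur(cores=3000, years=7)

print(f"storage: {storage / 1000:.1f} M€")            # 4.2 M€
print(f"compute: {compute / 1000:.1f} M€")            # 2.1 M€
print(f"total:   {(storage + compute) / 1000:.1f} M€")  # 6.3 M€
print(f"final reprocessing: {reprocessing_years(7, 3):.1f} years")
```

The sum of the two table totals is 6.3 M€. Changing `final_pb`, `cores` or the speed-up factor shows how sensitive the budget is to each sizing assumption.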

Cost of on-demand processing

Sizing the cost for an on-demand production is much more difficult, as it depends on how many users will ask for it. As a result, the selected solution will need monitoring and adaptability, and probably some over-sizing. Of course, there is a large cost reduction in storage, as only temporary storage is needed. In case of success, if each site is processed several times for different users, the processing cost might be greater than that of the systematic production.

Moreover, if data produced on demand are not kept on the project storage, users will be tempted to store the products generated for them on their own premises.

If we try to provide numbers, an on-demand system needs less than 10% of the storage capacity of the global systematic production, and 20% to 50% of its processing capacity.
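To turn these percentages into an order of magnitude, here is a minimal Python sketch. It assumes that cost scales linearly with capacity and applies the fractions to the seven-year totals of the systematic production (4.2 M€ for storage, 2.1 M€ for processing). Both assumptions are mine, made for illustration only.

```python
# Seven-year costs of the systematic production, in k€ (from the cost table).
SYSTEMATIC_STORAGE_KEUR = 4200.0
SYSTEMATIC_COMPUTE_KEUR = 2100.0


def on_demand_cost_range_keur(storage_fraction_max=0.10,
                              compute_fraction_range=(0.20, 0.50)):
    """Return (low, high) bounds for the on-demand hardware cost in k€.

    Assumes cost is proportional to capacity. The low bound takes storage
    as negligible and the smallest processing share; the high bound takes
    the largest storage and processing shares.
    """
    compute_low, compute_high = compute_fraction_range
    low = compute_low * SYSTEMATIC_COMPUTE_KEUR
    high = (storage_fraction_max * SYSTEMATIC_STORAGE_KEUR
            + compute_high * SYSTEMATIC_COMPUTE_KEUR)
    return low, high


low, high = on_demand_cost_range_keur()
print(f"on-demand hardware: {low / 1000:.2f} to {high / 1000:.2f} M€")
```

Under these assumptions the on-demand hardware would cost roughly 0.4 to 1.5 M€ over seven years, before any over-sizing for peaks in demand.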

Carbon budget

Beyond cost, the carbon budget of an on-demand solution is also much better. Most of the carbon, especially in France where electricity is low-carbon, comes from hardware manufacturing. It is therefore probably proportional to the investment cost.

However, computing experts say that a CPU node is used most efficiently when it is busy at least 80% of the time. As a result, nodes used for on-demand production, with random variations in demand, would be used less efficiently than those of a well-scheduled global production.
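One way to read this argument is that idle cores still cost money and carbon, so the cost per core-year of useful work grows as utilisation drops. The Python sketch below illustrates this reading with the unit cost from the cost table. The 40% utilisation figure for on-demand production is purely hypothetical.

```python
# 100 k€ per 1000 cores per year, i.e. 100 € per core per year.
COST_EUR_PER_CORE_YEAR = 100.0


def cost_per_used_core_year(utilisation):
    """Cost of one core-year of useful work at a given utilisation (0-1]."""
    if not 0 < utilisation <= 1:
        raise ValueError("utilisation must be in (0, 1]")
    return COST_EUR_PER_CORE_YEAR / utilisation


scheduled = cost_per_used_core_year(0.80)  # well-scheduled global production
on_demand = cost_per_used_core_year(0.40)  # hypothetical on-demand utilisation

print(f"scheduled: {scheduled:.0f} € per used core-year")
print(f"on demand: {on_demand:.0f} € per used core-year")
```

If manufacturing carbon is proportional to investment, as suggested above, the same ratio would apply to the carbon footprint per unit of useful processing.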

Of course it is essential to try to optimise the computing and storage volumes whatever the selected solution is.

Processing costs: software and operations

Processing software is expensive too: you need a database, a scheduler, processing chains, and monitoring and control software. But whether it is for on-demand or systematic global production does not change the cost much. On-demand production is maybe a little more complex, as it requires user support, interfaces and documentation. But a global production in near real time requires complex monitoring solutions.

Of course, hybrid solutions exist, processing one part of the globe systematically and offering the remaining parts on demand. Regarding software, a hybrid solution is probably a bit more expensive, as it requires implementing both approaches.

Pros and cons of each solution

Besides the costs described above, each solution has its pros and cons:

Systematic production

Advantages

- Data are available everywhere without delay. Users may use these data efficiently with cloud solutions.

- Data can be harvested by other processing centers

- It is possible to create downstream products on large surfaces efficiently, with near real time processing if necessary.

- Comparison with older data is easy. Scientists like to observe trends, which can be difficult if you need to ask for a reprocessing before that.

- Data are always available on the mission servers, so users do not need to save them on their own disks and duplicate the archive

Drawbacks

- Some of the regions produced might never be downloaded, so processing capacity and storage are used where they are not needed. However, this drawback disappears as soon as there is a global production of some variable

- When a new version of processors is available, it takes a long time to reprocess and update the versions

- Larger cost (even if those are small amounts compared to the total cost of the mission)

- Larger carbon emissions (even if those are small amounts compared to the total carbon budget of the mission). Moreover, Sentinel-like mission data are used to try to monitor and reduce carbon emissions.

On-Demand processing

Advantages

- Only the needed products are processed

- The processing can always be done with the latest version

- Global reprocessing is not necessary

- Reduced cost (even if those are small amounts compared to the total cost of the mission)

- Reduced carbon emissions (even if those are small amounts compared to the total carbon budget of the mission)

Drawbacks

- Processing takes time, all the more so if some of the methods used require processing the data in chronological order (such as MAJA). In that case, a time series can’t be processed in parallel

- As data are not stored permanently on the project servers, processing on the "cloud" is not optimised. The data might be erased before the users who asked for them have finished their work. As a result, users need to download the data.

- Satellite telemetry usually comes in long chunks: processing even a small area of interest (AOI) requires accessing a large volume of data. This drawback is exacerbated for missions with a wide field of view, in which an AOI is seen from different orbits.

- It is hard to estimate the storage capacity and computing power necessary to meet the demand. As a result, it requires studies of user demand, and the solution should be quickly adaptable, and maybe over-sized

- If the mission is a success, some regions or countries might have to be processed several times, reducing the gain of on-demand processing.

- On-demand processing prevents any global processing, or even continental scale processing. Even country scale might be problematic.

- Near real time processing is not possible

- Users might be deterred by the processing latency and decide to give up on the mission, or prefer another one, even if it is not the best choice for their application. This is especially important for new missions, where complexity in access might prevent the easy discovery of the interest of the data.

- The mission will not have the impact it might have had with systematic processing

Conclusions

The main advantage of on-demand production is its reduced cost. However, this cost remains small compared to the overall mission cost. The carbon budget also plays in favor of on-demand production, but it is probably a small amount compared to the satellite budget. As a result, making full use of the satellite is probably better. This is even truer if the satellite is used to monitor the environment and help make decisions to reduce our carbon footprint. In any case, processors and storage should of course be optimised.

On the other hand, the long list of drawbacks of on-demand processing speaks for itself. It would clearly result in a much less useful mission.

Of course, there are hybrid solutions where some regions, countries or continents are processed systematically, and others on demand. This only changes the proportions of the pros and cons of each solution, and may introduce difficulties when processor versions differ between the two types of processing.

To conclude, in my opinion, on-demand processing is only interesting if we expect that the mission will not be a success among users. But in that case, do we really need that mission?