Free and open data: fine, but who pays for the processing?

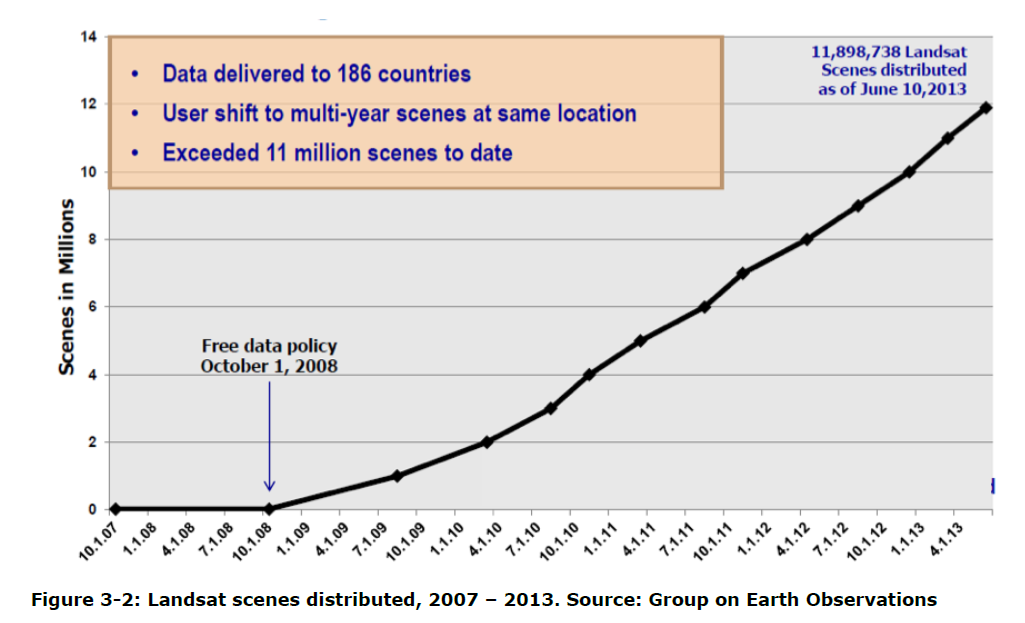

In the previous post, Olivier advocated for the open data policy in remote sensing. Although Olivier is facing some real resistance because the Sentinel-HR mission would step on the toes of industrial champions, my feeling is that there is now a broad consensus on this issue. The economic and social benefit of the open data policy in remote sensing is well accepted, especially in the scientific community. Yes, scientists are rational people and they prefer not to pay rather than... to pay.

Talk about free data!

I think that the discussion should go beyond the cost of the data itself. Sentinel-2 generates 1.6 terabytes (TB) of compressed raw images every day. It’s great that the data is free, but how do I handle that? Currently, a 1 TB hard drive costs about 50 €. Storing all Sentinel-2 data would therefore cost me about 30 k€ in hard drives every year. Let’s assume my department tries to optimize expenses by subscribing to a cloud storage service. Google provides a pricing example for 160 TB of storage with bandwidth consumption that spans multiple tiers: it costs 7500 € per month, and that much storage is far from sufficient for large-scale processing of Sentinel-1 or Sentinel-2 data. This is clearly too expensive for many groups, not only research labs but also startup companies.
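To make the arithmetic explicit, here is a minimal Python sketch that uses only the figures quoted above. The function and constant names are illustrative, and the calculation ignores redundancy, power, and maintenance, so treat it as an order-of-magnitude check rather than a real budget.

```python
# Back-of-the-envelope storage costs, using only the figures quoted in the post.
DAILY_VOLUME_TB = 1.6                # Sentinel-2 compressed raw images per day
DRIVE_PRICE_EUR_PER_TB = 50.0        # price of a 1 TB hard drive
CLOUD_QUOTE_TB = 160.0               # storage volume in the cloud pricing example
CLOUD_QUOTE_EUR_PER_MONTH = 7500.0   # quoted price, bandwidth included


def yearly_volume_tb(daily_tb: float, days: int = 365) -> float:
    """Volume accumulated over one year of acquisitions."""
    return daily_tb * days


def drive_cost_eur(volume_tb: float, price_per_tb: float) -> float:
    """Cost of raw hard drives for a given volume (no redundancy assumed)."""
    return volume_tb * price_per_tb


def days_to_fill(capacity_tb: float, daily_tb: float) -> float:
    """Number of days of acquisitions that fit in a given capacity."""
    return capacity_tb / daily_tb


volume = yearly_volume_tb(DAILY_VOLUME_TB)
print(f"Yearly volume: {volume:.0f} TB")
print(f"Hard drives for one year: {drive_cost_eur(volume, DRIVE_PRICE_EUR_PER_TB):.0f} EUR")
print(f"Cloud example per year: {CLOUD_QUOTE_EUR_PER_MONTH * 12:.0f} EUR")
print(f"Days to fill the cloud example: {days_to_fill(CLOUD_QUOTE_TB, DAILY_VOLUME_TB):.0f}")
```

With these inputs, one year of Sentinel-2 is about 584 TB, or roughly 29 k€ in bare drives, while the 160 TB of the cloud example would fill up in about 100 days of acquisitions.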

Of course I don’t need to store all Sentinel data: I could download the files and delete them once the processing is done. Yet processing data is costly, too. Amazon rates range from $94 to $2,367 per year of CPU time. As an example, generating snow and ice products over Europe from Sentinel-2 since 2016 took about 100 years of CPU time!
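The same kind of rough check works for processing. The sketch below assumes that the quoted Amazon rates are the price of one CPU for one year, and that the job parallelizes perfectly. Both are simplifying assumptions, and the names are illustrative.

```python
# Rough processing cost for the snow and ice example, from the figures in the post.
CPU_YEARS = 100.0                   # CPU time quoted for the Europe-wide products
RATE_LOW_USD_PER_CPU_YEAR = 94.0    # cheapest quoted rate (assumed: one CPU, one year)
RATE_HIGH_USD_PER_CPU_YEAR = 2367.0 # most expensive quoted rate


def processing_cost_usd(cpu_years: float, rate_per_cpu_year: float) -> float:
    """Total cost of a job, given its CPU time and a price per CPU-year."""
    return cpu_years * rate_per_cpu_year


def wall_clock_days(cpu_years: float, n_cpus: int) -> float:
    """Elapsed time if the job spreads evenly over n_cpus (ideal scaling assumed)."""
    return cpu_years * 365 / n_cpus


low = processing_cost_usd(CPU_YEARS, RATE_LOW_USD_PER_CPU_YEAR)
high = processing_cost_usd(CPU_YEARS, RATE_HIGH_USD_PER_CPU_YEAR)
print(f"Cost range: {low:.0f} to {high:.0f} USD")

# 1000 CPUs is a hypothetical cluster size, chosen only for illustration.
print(f"Elapsed time on 1000 CPUs: {wall_clock_days(CPU_YEARS, 1000):.1f} days")
```

Under these assumptions, 100 CPU-years cost between about $9,400 and $236,700 depending on the rate, which shows how much the bill depends on the type of instance chosen.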

These estimates of storage and processing costs are probably inaccurate, but they give an order of magnitude. The conclusion is that although the data is free, the cost of storage and processing is out of reach for many research projects, not to mention small businesses. This is why Google Earth Engine is a huge success in the remote sensing community (despite the warnings of some authors...). I was also struck by this sentence in the recent announcement of the release of Landsat Collection 2:

« Collection 2 was processed in the Amazon Web Services (..) at a clip of 450,000 scenes per day—a speed that enabled the reprocessing of the entire archive in just five weeks. By comparison, it took 18 months to process Collection 1 in 2016, at a rate of 25,000 scenes each day. » (USGS, 01 Dec 2020)

Due to economies of scale, unit costs of storage and processing are significantly lower for Google and Amazon than for a small company or a university. But can we rely on tech giants to do public research? Is it sustainable for a startup company to build a commercial application based on Google Earth Engine[1]? Should we build operational services for the monitoring of climate, agriculture, and water resources on privately owned data centers? The DIAS are an alternative to Google/Amazon, but the costs are still too high for many users (we investigated this option in our lab to replace our current infrastructure).

A public cloud for the public good

I wonder how much a free, public cloud server based on open source software would cost, one that gives everyone the possibility to tap into the power of the Copernicus data in a transparent and reproducible way.

The Copernicus 2019 market report indicates that « the EU has invested 8 billions € into this program from 2008 up to 2020 (…) Over the same period, this investment will generate economic benefits between EUR 16.2 and 21.3 billion (excluding non-monetary benefits). »

The EU has made the Copernicus Earth observation program a flagship of the European space policy thanks to the high quality of its satellite fleet and its open data policy. There is evidence that the open data policy is generating more « monetary benefits » than the investment put into the program. It is time to evaluate the cost of giving everyone the ability to freely process these data, not to mention the potential economies of scale in energy consumption from concentrating computation and storage.

« A public cloud for the public good »: it sounds like a nice program, doesn’t it?

[1] From Earth Engine FAQ: « Earth Engine’s terms allow for use in development, research, and education environments. It may also be used for evaluation in a commercial or operational environment, but sustained production use is not allowed. »